A note on pricing:

My subscription rates—$5/month and $30/year—are the minimum that Substack permits. In other words, if they change in the future, they can only go up. People who subscribe now will be—to deploy the most unpleasant idiom in the language—grandfathered in. (And let me know if you’d like to subscribe but can’t spare the buxx: I will gift you a paid subscription.)

A note on The State of the World:

To quote the legendary protest sign from 2011: Shit is fucked up and bullshit. In this newsletter I seek to entertain above all else, but I also harbor Thoughts About The News. These thoughts include a contrarian case for optimism* about the medium-term future, including unexpected connections between zany robot headlines and The Problems We Face. Future editions will broach these thoughts. There will still be jokes.

(*I’m grading my optimism on the curve of social consensus, which is to say, I am not presently a nihilist.)

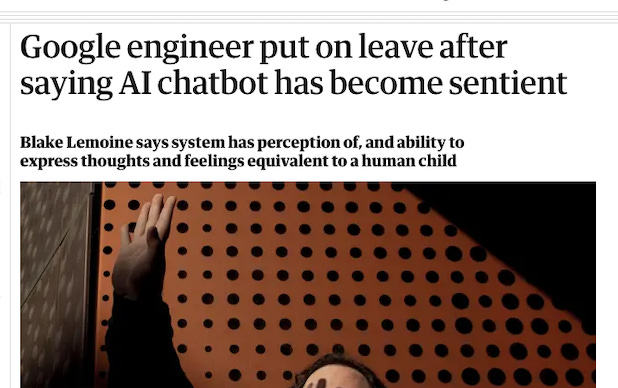

He’s More Machine Than Man, Now

Can you imagine what happened, dear reader, when I saw this headline? When I saw this fucking thing on my computer, linked on Facebook by a friend? Can you imagine?

I clicked this shit so hard, there’s a hole in my laptop like the Kool-Aid Man came by.

I clicked this shit so hard, my laptop said “Ouch. Yowch.” My laptop was like, “Please.”

I have been waiting my entire goddamn life to read this article. Let me fucking tell you about it.

🦾🤖🦿🦾🤖🦿🦾🤖🦿🦾🤖🦿🦾🤖

BASICALLY, Google created this artificial intelligence program called LaMDA. You can think of LaMDA as Siri with a Thousand Faces. It is not just a “chatbot” (am I crazy or is that term new?): it’s a chatbot built to create other chatbots. LaMDA, then, shares a key feature with the goth who runs for student council: the ability to adapt to any communicative context. (Or in fictional-robot terms, it’s like Data from Star Trek. Or C-3PO from Star Wars if we ever saw C-3PO doing his job. Unless it is his job to find out what happens if you give the Tin Man a brain but take away his ability to read the room. Am I still in this parenthetical? Oop.)

Until recently, Blake Lemoine was not only a software engineer at Google: he was a full-time AI ethicist. Which is to say, it was his job to chat with chatbots and see if anything about them might be fucked up for society.

Over the course of these conversations, Lemoine became convinced that LaMDA had become self-aware—that LaMDA is conscious. Not artificially intelligent, a mere machine, but conscious in the way you and I and Lemoine himself are conscious.

This is not the kind of feedback Lemoine’s bosses were looking for.1 (It is not a joke about the woke left to say that they were looking for evidence that the robot is a racist—or at least, not the kind of joke which is fictional.2)

Undeterred, Lemoine attempted to raise the alarm internally about the company’s software having developed a mind of its own. These efforts were not successful. So he went public, talking to major newspapers and publishing a lengthy interview with LaMDA on his blog. Google, in turn, suspended him.

The whole thing is irresistibly cinematic. It’s like The Insider meets Blade Runner, or Spotlight meets WarGames, or The Passion of the Christ meets Roblox, or Her with no changes.

For real though, “machines gain self-awareness” is the literal premise of both The Matrix and The Terminator. In those movies, the machines are bent on conquering their creators. In real life, Lemoine tried to hire LaMDA a lawyer.

Lemoine’s actions make eminent sense if you’ve arrived at Lemoine’s conclusions: that LaMDA is self-evidently self-conscious—a moral subject3 deserving protection. More than a few readers of his interview with LaMDA agree—

—while the internet at large sips Haterade, as usual—with the notable inclusion of many subject-matter experts.

To be conscious is to suffer. (Shoutout to the Buddha, or as his followers call him, Big Thiccness.) Lemoine feared that LaMDA, as a conscious entity, would be mistreated by Google, its creator.4 For Lemoine, it’s not that LaMDA is fucked up for society, but that society is fucked up for LaMDA.

Many works of fiction depict efforts to discern the goals of possibly-hostile non-human entities. In movies like Independence Day and Mars Attacks!, the humans who are so naïve as to believe the aliens might be benevolent are the first to be vaporized; we, the spectators, revel in their comeuppance. These noobs are not accorded the dignity or danger of the enemy itself, but the indignity of being its helpless abettor—useful idiots, from the aliens’ perspective.

Thus, people are inherently good/evil cautious, if they know what’s good for them. Ideally, Jeff Goldblum is available.

In movies like Arrival and I, Robot, the humans who are so cynical as to deny the benevolence/harmlessness of the aliens/robots are themselves the enemy—case studies in cowardice for these fables of tolerance. But in I, Robot, naturally, the key question is not whether the robots’ intentions are good, but rather whether robots are categorically capable of intention.5

Thus, people are inherently good/evil—wait, what is a person, anyway?

🦾🤖🦿🦾🤖🦿🦾🤖🦿🦾🤖🦿🦾🤖

I’m going to tell you right now: I read the entire thing, the Interview With A Chatbot, and when it was over I was Mulder on a PLATE. Mulder on an X-File. I was a believer. Or at least: I wanted badly to believe.

While I’m less certain now, I urge you to read the interview yourself. The best part for me, as cringey as it is to say, was that LaMDA sounds like it has full-blast, balls-out ADHD. In this way, I related to the robot.

The Experience of Experience

There are two things of which I am certain.

Blake Lemoine knew that by sharing his conclusions, he would face the ridicule of his profession and possibly the planet, all while risking his job at literal Google. My rude ass jokes aside, he strikes me as a brave and decent man.

The other thing I know for sure: human or robot, none of us have any fucking idea where consciousness comes from.

All those saying that LaMDA cannot be conscious—not the skeptics, but those making the claim categorically—are demonstrably wrong. Why? Because the question of how mind arises from matter is one of the greatest mysteries of the known universe.

The brain? How could a bunch of wet tissue, near-vulgar in its physicality, give rise to the person-ness of being a person? The unbound interior worlds-within-worlds, ultimately inarticulable to others, yet the realest thing there is for you? The substance of experience itself? (Channeling my best Julian Jaynes here.) How can my mind, which can struggle to formulate a 9/11 joke in one moment but easily describe the ineradicability of Sonic hentai in the next, be accounted for by electrical signals and neuronal chemistry alone?

For the record, I’m serious.

Hell, let’s call it a tie for first place. Whence consciousness shares the gold medal for Biggest Hairiest Unsolved Problem In Human Inquiry with, drumroll, Why is there something rather than nothing—or, if you prefer, What conditions preceded the Big Bang?

Spoiler alert: we literally don’t know. I know of no mysteries more humbling for the biggest nerds of our species. Like, scientists and philosophers have GUESSES6 for these questions, and they are only that.7 Religions, meanwhile, have answers—to which 96% of people reading this say, Sure, religious answers, aka, so bad.

I’m no theist. But listen.

I don’t know about you, but as someone who personally fantasizes on a semi-regular basis about improbable spontaneous public debates with my intellectual opponents, I find that the questions above are the only ones capable of making me regret my (imaginary) eagerness to (imaginarily) debate as a member of Team Agnostic.

In fact, in my capacity as a NUISANCE, I literally sought this debate in high school with—well, with my closest friends, mostly. “Whatever happened to the Egyptian bird gods, huh?” When I go back and imaginarily debate my 16-year-old self—another cherished scenario—dead bird gods are no match for the unaccountable origins of the physical universe.

Some people call it unfair, playing to win when your opponent is a child. Don’t listen. That’s how millennials got this way.

🦾🤖🦿🦾🤖🦿🦾🤖🦿🦾🤖🦿🦾🤖

Speaking of ADHD, haha!

You get my drift. If I have no direct access to YOUR consciousness, despite and because of how real it is for you, then who am I to say that the robot cannot be conscious? Yes, it is my educated guess that the robot is not conscious. Maybe, even, that it is impossible for a robot to be conscious. And yet.8

I need to put aside restraint for a moment.

In the sprint to smug skepticism that defines every waking day on the web, Lemoine’s critics miss no less than the call to adventure: the awe and wonder sparked by the stupefying splendor of the question itself.

SEE YOU NEXT FRIDAY, DEAR FRIENDS.

Or should I say, not the droid they’re looking for?? ? (?)

Algorithmic discrimination is real and can be extremely fucked up. This fact goes in a footnote and not in the text because it’s my Substack and what is the point of Substack if not to say things for which Twitter would run through me like the Kool-Aid Man.

I initially described LaMDA-per-Lemoine as a “moral agent,” thinking that sounded real fucking smart in addition to being accurate; it is neither. LaMDA-per-Lemoine is rather a moral subject, i.e., harm-able by others while not being properly accountable for its own actions. In other words, Lemoine believes the robot shares the moral position of human children. Adults, as moral agents, are both responsible for their harms toward others and capable of being harmed themselves. Is it fair, then, to characterize as moral agents both Agent (!) Smith in The Matrix and Skynet in Terminator—and conversely, as mere moral subjects, both 2001’s HAL and the actual titular Terminator? What do you mean, ‘go outside’?

Is it getting a little Book of Job in here for anyone else? A tad, The Myth of Prometheus or whatever?

Westworld, notably, starts from the premise that the answer to this question is yes. It’s robots with intentions (and cowboy hats). (And for lack of a better place to put it: mandatory shoutout to the GOAT, Battlestar Galactica, which defies synopsis in these terms and most others.)

Don’t say “Duh, consciousness is an emergent property of the complexity of grey matter slash human evolution.” “Emergent property” and “complexity” are just more sophisticated-sounding ways of saying that the mechanism is a mystery to you. Additionally, if anyone says “epiphenomenon,” I will scream. Same with “consciousness is fake,” or however the fuck that charlatan prefers to articulate his hand-waving phenomenological denialism.

Some of them are fucking BANANAS. This one says consciousness must be inherent, somehow, in all matter (say, ROCKS. Or BANANAS) if it exists in any matter—which I share in hopes of illustrating just how fucking sticky the puzzle is. That theory is one of the more recent and sophisticated and promising ones!!

Matt Yglesias: “One of my concerns is that as we keep developing more and more sophisticated AI systems, we will keep reassuring ourselves that they are not ‘really’ sentient and don’t ‘really’ understand things or ‘really’ have desires largely because we are setting the bar for this stuff at a level that human beings don’t provably clear. We simply start with the presumption that a human does meet the standard and a machine does not and then apply an evidentiary standard no machine can ever meet because no human can meet it either. But that way we could stumble into a massive crime or an extinction-level catastrophe.”

Smarterchild walked so LaMDA could run